Unity Planetoid Experiments, Part 2

In the first part of this article, I covered planetoid creation and navigation. Let’s press right on into how we navigate BETWEEN the planets!

Jetpackin’

I actually approached this project as first and foremost a jetpack “simulator” (though that’s a bit of a grand term). Even before the planetoids and multiple gravity sources, the first thing I implemented was my jetpack control method.

The basic idea is that the pack operates via thrust vectoring, with one or more moving nozzles providing lateral as well as vertical thrust. If the player leaves the directional controls alone, the nozzle(s) point downwards, giving vertical lift. If the player pushes the stick forwards, the nozzles rotate backwards to drive the user in the direction they expect. Of course, you lose lift at the same time, so it becomes a balancing act to find enough forward speed without dropping yourself out of the sky.

The system I went with is physics-based; the player object has a non-kinematic rigidbody, and the user’s inputs are turned into forces. The first step of that is to turn them into a nozzle orientation, which is nice and simple:

    // The maximum amount in degrees the nozzle can rotate on each axis
    const float kMaxThrustNozzleRotation = 80.0f;

    // Nozzle rotation around the X axis is driven by the left stick Y axis
    // (forward/back)
    float xRot = controllerAxisLeftY * kMaxThrustNozzleRotation;

    // Nozzle rotation around the Z axis is driven by the left stick X axis
    // (left/right)
    float zRot = controllerAxisLeftX * -kMaxThrustNozzleRotation;

    // Build the rotation quaternion
    Quaternion rot = Quaternion.Euler(xRot, 0.0f, zRot);

    // Our thrust direction becomes the up vector rotated by the
    // quaternion, transformed from the player object's local space
    // into world space
    Vector3 thrustDirection = transform.TransformDirection(rot * Vector3.up);

Then all we need to do is scale thrustDirection by our input value (I use the trigger so we can apply variable amounts of thrust) to get jetpackThrust, and apply that as a force with rigidbody.AddForce(jetpackThrust) in the FixedUpdate function.
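Put together, the whole control path could be sketched roughly as below. This is only an illustration under assumptions: the class name, the maxThrust value and the input axis names (Unity’s default "Horizontal", "Vertical" and "Fire1" entries standing in for the stick and trigger) are hypothetical and not taken from the project.

```csharp
using UnityEngine;

// Hypothetical sketch: input is read in Update and applied as a force in FixedUpdate.
[RequireComponent(typeof(Rigidbody))]
public class JetpackThrustSketch : MonoBehaviour
{
    // The maximum amount in degrees the nozzle can rotate on each axis
    const float kMaxThrustNozzleRotation = 80.0f;

    // Assumed value: force applied at full trigger
    public float maxThrust = 30.0f;

    Rigidbody body;
    float stickX, stickY, thrustInput;

    void Awake()
    {
        body = GetComponent<Rigidbody>();
    }

    void Update()
    {
        // Assumed axis names from Unity's default Input Manager setup
        stickX = Input.GetAxis("Horizontal");
        stickY = Input.GetAxis("Vertical");
        thrustInput = Mathf.Clamp01(Input.GetAxis("Fire1"));
    }

    void FixedUpdate()
    {
        // Same nozzle orientation calculation as in the snippet above
        Quaternion rot = Quaternion.Euler(
            stickY * kMaxThrustNozzleRotation,
            0.0f,
            stickX * -kMaxThrustNozzleRotation);
        Vector3 thrustDirection = transform.TransformDirection(rot * Vector3.up);

        // Scale the direction by the variable thrust input and apply it as a force
        Vector3 jetpackThrust = thrustDirection * (thrustInput * maxThrust);
        body.AddForce(jetpackThrust);
    }
}
```

Reading the input in Update and applying the force in FixedUpdate keeps the physics work on the physics timestep, which is why the two are split here.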

Orbits

The planets I described in the first article were static. That’s fine, but planets and moons usually orbit others, and it would be cool to have that working.

Luckily it’s simple to do. I just added a rigid body to my planetoids, set all drag to zero, set them up with a system that applies an initial force (for movement) and torque (for spin), and put them around another gravitational body. As long as you get the initial force close to correct, they’ll fall into a nice orbit.

Ah, but what will happen if something bumps into them? Well, even if their rigid body has an extremely high mass, a collision with another object whose rigid body is set to kinematic will still disturb them. The planetoid will probably deorbit, with no doubt tragic consequences for all involved.

The workaround for this is to create another object with which all collisions will take place. It will shadow the planet without actually being parented (as parenting it would impart the results of any collision back to its parent’s rigid body).

Take any collider off the original planet and give one to the shadow object instead, then every update set the shadow’s transform position and rotation to match its owner’s. The timing for this operation is important; I tried LateUpdate (the collider lags by a frame) and FixedUpdate (the collider doesn’t update smoothly) first, but Update turned out to be the one that gives you the correct position and orientation.

The effect of this is that any object will now collide with your fake shell and not the planet itself, which will remain blissfully unaffected by the otherwise catastrophic results of innocent kinematic players landing on its surface.
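A minimal sketch of such a shadow object might look like this. The class and field names are hypothetical; the script is assumed to sit on an unparented object that carries the collider, with owner pointing at the orbiting planetoid.

```csharp
using UnityEngine;

// Hypothetical sketch: lives on the unparented shadow object that holds the collider.
public class ShadowColliderSketch : MonoBehaviour
{
    // The orbiting planetoid this shell follows (assigned in the inspector)
    public Transform owner;

    void Update()
    {
        // Copy the owner's pose every frame; Update was the timing that
        // gave the correct position and orientation in the experiments above
        transform.position = owner.position;
        transform.rotation = owner.rotation;
    }
}
```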

But hang on – why is the player using a kinematic rigid body? Didn’t we just discuss a physics-based jetpack system?

Landing on Planetoids

Those of you paying attention will note that in the last article I cautioned against the use of a force-based movement system for our character when on the ground, and yet here I am using one for my jetpack. Before I get into why I’m doing that, let’s see why using forces for character movement is a bad idea when attaching to planets in the first place.

When landing on an orbiting planetoid, how do we keep our character attached to it as it moves through space? Well, the normal thing to do is to parent our character to the object it’s just landed on. Parenting means that our character’s transformation matrix will be transformed relative to the parent’s matrix and thus all of our movement becomes local to that parent object. In effect, we’re “stuck” to it.

The problem is this: parenting non-kinematic rigid bodies together is a no-no. In fact, you shouldn’t parent non-kinematic rigid bodies to any moving object. It might seem to work most of the time, but you’ll get odd effects when you least expect them; the parent’s transform updates don’t play nice with force-based movement.

So what do we do? We actually have a few options. We could potentially use a joint, such as a FixedJoint, which is a method of attaching rigid bodies together. However, this has some differences to the standard parent-child relationship, and wouldn’t work for our needs here. Another approach would be to go to a completely kinematic solution – replacing the force-based jetpack system with a method of updating the object transform manually, which would involve keeping track of my own accumulated thrust vectors, collisions and so on. Frankly, this seemed like a lot of work, and I already had a movement system that felt great which I didn’t want to wreck. So I decided to switch my character’s rigid body between kinematic and non-kinematic depending on whether it was on the ground.

A kinematic rigid body is one which doesn’t respond to forces. If you set one up by checking the Is Kinematic box in the inspector, it’s expected that you control it manually by updating its transform. So what I do is when the player is jetpacking through space, his rigid body is a standard non-kinematic one which responds to gravity and jetpack thrust. As soon as I detect a ground landing though, the rigid body is switched to kinematic and my code changes to use the movement system described in the previous article. If the player applies thrust, or if gravity from another body begins to pull him in a new direction, we switch back to non-kinematic again to allow the forces to take control.
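The switch itself can be sketched in a few lines. Everything here is an assumption for illustration: the class and method names are hypothetical, and the landing detection and ground movement code that would call them are not shown.

```csharp
using UnityEngine;

// Hypothetical sketch of toggling between force-driven flight and kinematic ground movement.
[RequireComponent(typeof(Rigidbody))]
public class GroundAttachSketch : MonoBehaviour
{
    Rigidbody body;

    void Awake()
    {
        body = GetComponent<Rigidbody>();
    }

    // Call when a ground landing is detected
    public void AttachTo(Transform planetoid)
    {
        // Stop responding to forces, then ride along with the planetoid
        body.isKinematic = true;
        transform.parent = planetoid;
    }

    // Call when the player applies thrust or another body's gravity takes over
    public void Detach()
    {
        // Unparent first so the non-kinematic body is never a child of a moving object
        transform.parent = null;
        body.isKinematic = false;
    }
}
```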

This works great and only has one real drawback – the bouncy, slightly untethered feeling of light gravity vanishes when walking on a low-gravity planetoid. That’s because gravity isn’t actually applied when the player character is on a surface, and we rely purely on the ground-snapping from the movement code to keep us attached. That may sound bad (and it’s definitely not ideal), but it’s not actually that big of a problem for my use case. If it becomes more important, I’ll look for a solution.

One other point about parenting objects – never parent an object to another with non-uniform scale. If your objects are rotated, this will introduce shear into the child object and you’ll get very odd results, usually manifesting as a sudden and gigantic increase in scale. Avoid at all costs!

Alright! So now we can navigate between orbiting planetoids. What’s next, I wonder?

A probably very queasy astronaut goes for a ride in a busy solar system.

ConsoleX: A Unity EditorWindow example

I was wondering recently why the Unity console window is so… spare. It lacks a lot of features you’d find in most modern logging implementations. Having some time on my hands at the moment, I thought I’d look into making a replacement. It’s maybe not the sexiest project, and I’m sure there are others out there (I haven’t looked) but it seemed like a good opportunity to dig into a deeper bit of editor scripting than I’ve done in the past.

My new console window has user-configurable channels, string filtering, Unity Debug.Log capturing and reporting, saving to a file and so on. As usual, pulling this off required a significant amount of time digging around with Google in forums, answers, random blogs etc. It might benefit someone to collect all of that in one place. So I won’t talk about feature design unless it overlaps with an interesting piece of Unity lore!

Initialization

The ConsoleX class (the X standing for Xtension or possibly Xtreme depending on your current caffeine levels) is derived from EditorWindow, and the script is placed in the Editor folder. Interesting note – the folder doesn’t have to be “Assets/Editor”; any subfolder of Assets called Editor works too – Assets/MySubFolder/Editor, for instance.

The window is opened by the user from a menu option, so I have an Init function tied to a MenuItem attribute:

    [MenuItem("Window/ConsoleX")]
    static void Init()
    {
        EditorWindow.GetWindow(typeof(ConsoleX));
    }

Next, the window needs to initialize a few things. The best place to do that turns out to be the OnEnable function, which is called after Init whenever the window is reinitialized. This happens more often than you’d expect – the most common case is when play mode is ended.

    void OnEnable()
    {
        LoadResources();

        if (channels == null)
        {
            channels = new List();
            SetupChannels();
        }

        if (logs == null)
        {
            logs = new List();
        }

        ...

Notice that I’m checking for null objects in that snippet – I’ll explain why in the next section.

Serialization

When working with editor scripting, proper serialization of your objects is critical. The lack of it is the reason why, after going into and out of play mode, your shiny, incredibly useful new EditorWindow all of a sudden clears its data and starts spewing errors everywhere.

The first part of fixing this problem is of course understanding what is actually happening. It took me longer than it should have to find this essential blog post on the subject by Tim Cooper, but luckily it’s very thorough and I had my objects serializing within minutes. One thing to bear in mind that isn’t called out in that post is that static variables aren’t serialized. Public variables are serialized automatically, protected/private fields need the [SerializeField] attribute, but static vars aren’t serialized at all. That’s because serialization works on instantiated objects; static fields are not instanced. Something to keep in mind for your editor class data design.

Serialization is the reason we check for nulls in the OnEnable function – data is deserialized back into the class before OnEnable is called, so those fields may in fact already be initialized with valid data at that point.
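To make the three cases concrete, here is a small sketch of how the fields might be declared. The window and entry class names are hypothetical, and the file is assumed to be saved as SerializationSketchWindow.cs inside an Editor folder.

```csharp
using System;
using System.Collections.Generic;
using UnityEditor;
using UnityEngine;

// Hypothetical entry type; custom classes need [Serializable] to be serialized
[Serializable]
public class SketchLogEntry
{
    public string text;
}

public class SerializationSketchWindow : EditorWindow
{
    // Public field: serialized automatically
    public List<SketchLogEntry> publicLogs;

    // Private field: serialized only because of the attribute
    [SerializeField]
    List<SketchLogEntry> privateLogs;

    // Static field: never serialized, so it has to be rebuilt every time
    static List<SketchLogEntry> staticLogs;

    void OnEnable()
    {
        // Serialized fields may already hold restored data, so only create them if missing
        if (publicLogs == null)
        {
            publicLogs = new List<SketchLogEntry>();
        }

        if (privateLogs == null)
        {
            privateLogs = new List<SketchLogEntry>();
        }

        // Nothing restores the static list after play mode ends
        if (staticLogs == null)
        {
            staticLogs = new List<SketchLogEntry>();
        }
    }
}
```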

GUI issues

Laying out your EditorWindow GUI is mostly straightforward, but as soon as you want to do something a bit off the beaten path it can be tricky to get the controls looking exactly like you want them to.

You’ll probably need to use most or all of the following classes:

- GUIStyle – Styles can be supplied per control, and determine exactly how the control will look.
- GUI – Methods to add controls manually; that is, without any automatic placement.
- GUILayout – Methods to add controls which are automatically positioned, and specify how they’re sized.
- EditorGUI – Editor-focused version of the GUI class.
- EditorGUILayout – Editor-focused version of the GUILayout class. This is the GUI control class I used the most.
- EditorGUIUtility – Less frequently-used but still vital layout controls.
- EditorUtility – Utility class used for a lot of miscellaneous functionality.

These classes all interrelate somewhat and you need to use most of them together. You usually won’t mix layout with non-layout classes though; in other words you’ll probably use GUI and EditorGUI together, or GUILayout and EditorGUILayout instead.

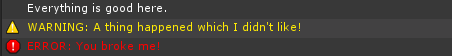

A good case study here would be my log lines themselves. I wanted to add icons before the text in some cases:

It turns out there are several ways to do that!

The first thing I tried was using an EditorGUILayout.LabelField, a function which has many different versions and has two different ways to achieve my goal: you can use a GUIContent object and populate it with both an image and text, or you can pass two labels into the function at one time, either GUIContent or plain strings.

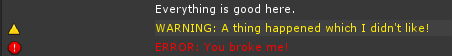

Using a single composite GUIContent object gave me a problem with long strings; the image part of the GUIContent would no longer be drawn when the text was longer than the width of the label. The second method of passing in two labels had several problems. First, you can only have one GUIStyle for both items. A pain, but in this case not a deal-breaker. Next, the gap between the first and second labels looks like this by default:

It took a LOT of searching before I found the solution to that: EditorGUIUtility has a function called LookLikeControls which allows the prefix label width to be set:

EditorGUIUtility.LookLikeControls(kIconColumnWidth);

The final problem was annoying: I’m using a scroll view (EditorGUILayout.BeginScrollView) to hold the log messages, and for some reason using EditorGUILayout.LabelField didn’t give me a horizontal scrollbar for long strings. This can be fixed using CalcSize on the style to find the desired width of the label, and a GUILayout option to properly size it (GUILayout options can be passed into all control functions):

    Vector2 textSize = labelStyle.CalcSize(textContent);
    EditorGUILayout.LabelField(iconContent, textContent, labelStyle,
        GUILayout.MinWidth(kIconColumnWidth + textSize.x));

So after figuring all of that out, I decided I wanted a separate GUIStyle for the icons after all, and threw most of it away! This is what I ended up with:

    EditorGUIUtility.LookLikeControls(iconColumnWidth);
    Rect labelRect = EditorGUILayout.BeginHorizontal();
    EditorGUILayout.PrefixLabel(logs[i].logIcon, logStyle, iconStyle);
    GUILayout.Label(logs[i].logText, logStyle);
    EditorGUILayout.EndHorizontal();

GUILayout.Label automatically sizes the label correctly so there’s no need to manually calculate and specify the control width. And using the separate PrefixLabel call allows me to specify a unique style for the icons. Done!

Transferring data between the game and the editor

Getting data from the editor to the game is trivial – editor classes can access game classes directly. Going the other way is a little more tricky as the inverse is not true.

My first solution was to log my data into a static buffer provided by a game-side class, and use OnInspectorUpdate polling to check the buffer and pull anything new over into the ConsoleX log. This worked fine, but OnInspectorUpdate is called ten times a second and is therefore introducing unnecessary overhead.

My friend Jodon (who runs his own company Coding Jar, check him out if you need any contracting work done!) suggested using C#’s events instead of polling. This works just as well and is much more efficient. I still need a game-side class, but now I define a delegate and an event in it. The main EditorWindow ConsoleX class uses another event (EditorApplication.playmodeStateChanged) to detect when the user enters play mode, then adds its own handler function to the game-side’s event.

The game-side class, ConsoleXLog:

    public class ConsoleXLog : MonoBehaviour
    {
        public delegate void ConsoleXEventHandler(ConsoleXLogEntry newLog);
        public static event ConsoleXEventHandler ConsoleXLogAdded;
        ...
    }

…and in the editor class ConsoleX:

    void PlayStateChanged()
    {
        if (EditorApplication.isPlaying)
        {
            ConsoleXLog.ConsoleXLogAdded += LogAddedListener;
        }
    }
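For the handler to be called at all, it has to be registered with the editor first. One way that could look is sketched below; the window class name is hypothetical, and hooking up in OnEnable and unhooking in OnDisable is an assumption rather than the ConsoleX code. Newer Unity versions replace this callback with EditorApplication.playModeStateChanged, which passes a state argument.

```csharp
using UnityEditor;

// Hypothetical sketch of registering for play mode changes from an EditorWindow.
public class PlayModeHookSketch : EditorWindow
{
    void OnEnable()
    {
        // Remove first so a re-enabled window never registers the handler twice
        EditorApplication.playmodeStateChanged -= PlayStateChanged;
        EditorApplication.playmodeStateChanged += PlayStateChanged;
    }

    void OnDisable()
    {
        EditorApplication.playmodeStateChanged -= PlayStateChanged;
    }

    void PlayStateChanged()
    {
        if (EditorApplication.isPlaying)
        {
            // This is where the window would attach to the game-side event
        }
    }
}
```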

It seems to take a few frames before the event is set up. Helpfully, if logs come in during that time the ConsoleXLogAdded event reports as null, so I can check for that and store the logs locally on the game side until the editor class has added its handler.
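The buffering idea can be sketched in plain C# without any Unity types. All names here (SketchEntry, BufferedLogSketch, EntryAdded) are hypothetical stand-ins rather than the ConsoleX classes: entries are held back while nobody is listening, then delivered in order once a handler exists.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for a log entry
public class SketchEntry
{
    public string Text;

    public SketchEntry(string text)
    {
        Text = text;
    }
}

public static class BufferedLogSketch
{
    public delegate void EntryHandler(SketchEntry entry);
    public static event EntryHandler EntryAdded;

    // Entries that arrived before any handler was attached
    static readonly List<SketchEntry> pending = new List<SketchEntry>();

    public static int PendingCount
    {
        get { return pending.Count; }
    }

    public static void Add(SketchEntry entry)
    {
        EntryHandler handler = EntryAdded;

        // An event with no subscribers is null, so keep the entry for later
        if (handler == null)
        {
            pending.Add(entry);
            return;
        }

        // Deliver the backlog first so the listener sees entries in order
        SketchEntry[] backlog = pending.ToArray();
        pending.Clear();
        foreach (SketchEntry old in backlog)
        {
            handler(old);
        }

        handler(entry);
    }
}
```

In this version the backlog is only flushed when the next entry arrives; flushing as soon as the handler is attached would need a custom add accessor on the event.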

I’ve still got some things to talk about – editor resources, EditorPrefs, and more – but I’ll leave that for another post. Hope this helps someone, someday!